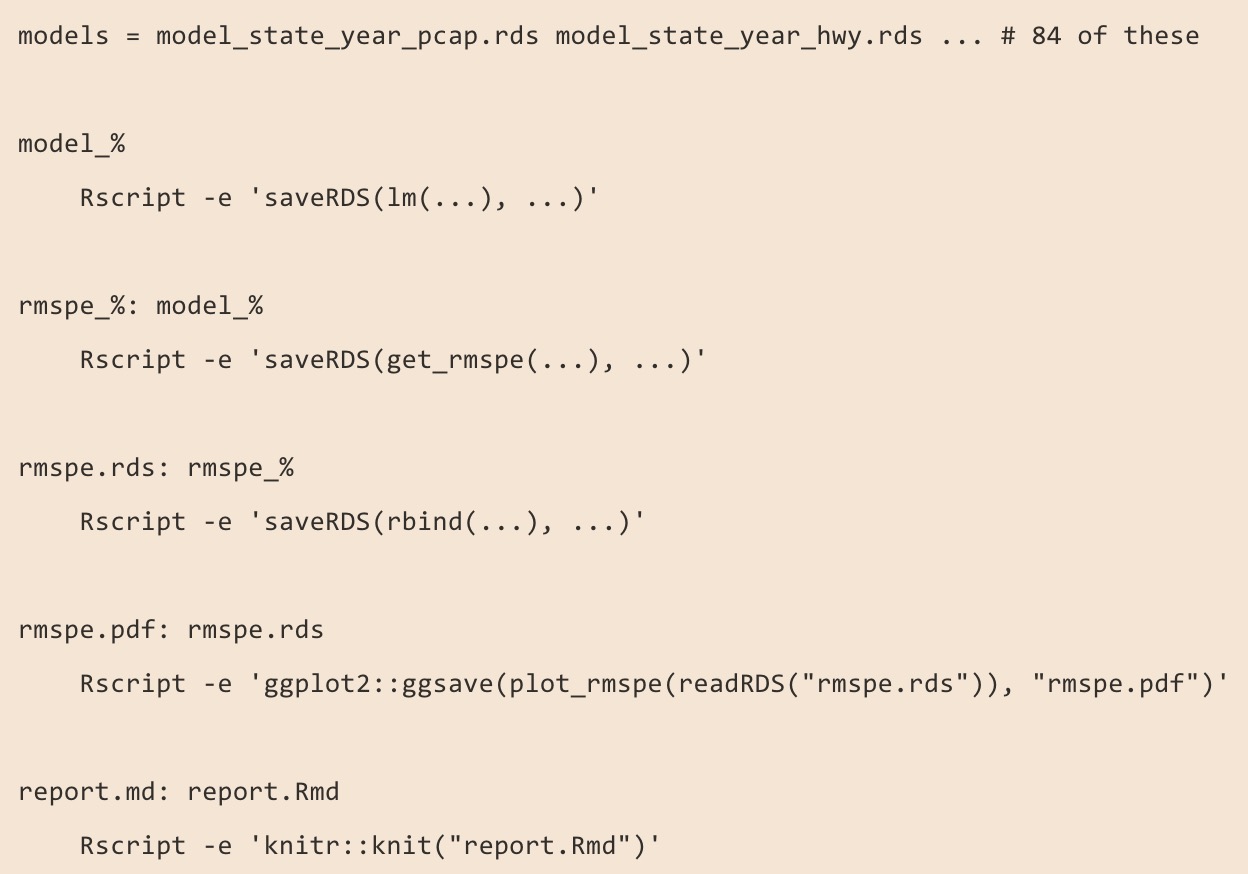

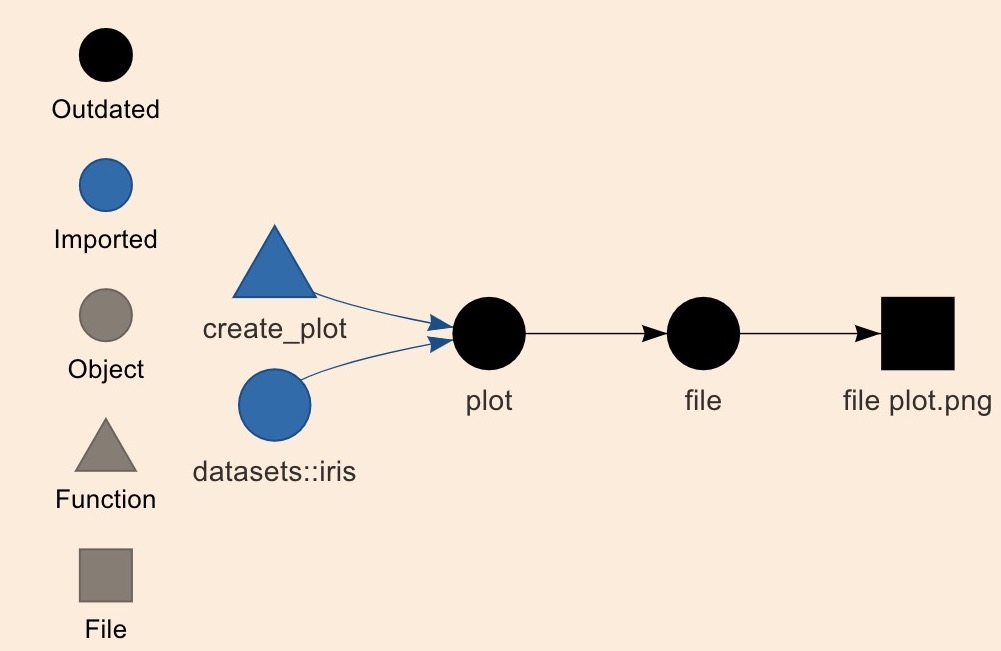

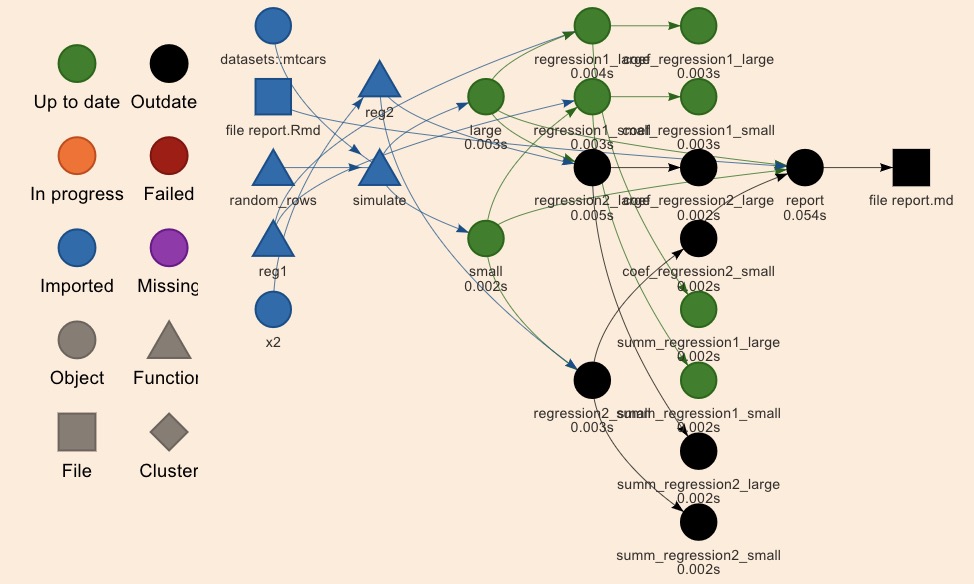

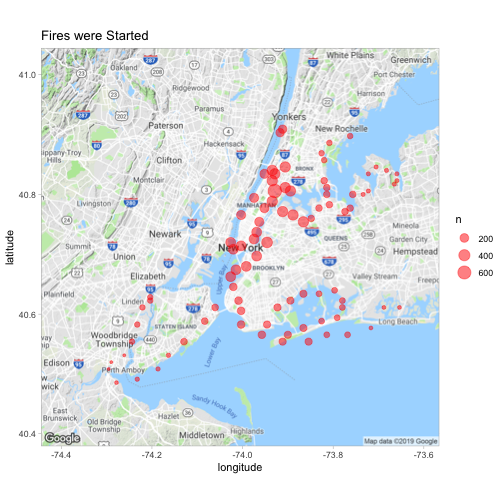

class: center, middle, inverse, title-slide

# <code>[drake](https://github.com/ropensci/drake)</code> for Workflow Happiness in R
### <code>Amanda Dobbyn</code>

---

# **Warning**

--

### This presentation contains less rap than you might have expected.

--

<br>

.center[I won't blame you if you want to make a quick getaway.]

--

<p align="center">
<img src="https://media.giphy.com/media/11gC4odpiRKuha/giphy.gif" height="300px">
</p>

<!-- .center[] -->

---

class: inverse

## Quick About Me

<br>

.left-column[

**Day job**: ultimate frisbee player

**For fun**: Data Scientist at [Earlybird Software](http://www.earlybird.co/), former co-organizer of [R-Ladies Chicago](https://rladieschicago.org/)

<!-- .pull-left[] -->

**GitHub**: [@aedobbyn](https://github.com/aedobbyn)

**Website**: https://dobb.ae

**Twitter**: [@dobbleobble](https://twitter.com/dobbleobble)

]

.right-column[]

---

## The Plan

<br>

1) I'll give an intro to what `drake` is and how it works.

--

<br>

2) We'll switch to a [live coding Rmd](https://github.com/aedobbyn/nyc-fires/blob/master/live_code.md) which hopefully won't totally break 🤞

--

<br>

In that part, we'll use the Twitter and Google Maps geocoding APIs to run a `drake` pipeline.

--

<br> <br> <br> <br> <br>

All code and slides on [GitHub](https://github.com/aedobbyn/nyc-fires).

---

## `drake`'s Main Idea

--

[`drake`](https://github.com/ropensci/drake) is a workflow manager for your R code.

--

In a complex analysis pipeline, it makes changing your code easier.

--

<br>

`drake` loves changes.

--

<p align="left">
<img src="https://media.giphy.com/media/JFawGLFMCJNDi/giphy.gif" alt="ilovechanges" height="300px">
</p>

---

## `drake`'s Main Idea

--

When something changes that makes the most recent results **out-of-date**, `drake` rebuilds *only* things that need to be rebuilt, so that

--

*what gets done stays done*.
<p align="left" style="padding-right: 20%;"> <img src="./img/drake_pitch.svg" height="300px"> </p> -- Created and maintained by [Will](https://twitter.com/wmlandau) [Landau](https://github.com/wlandau) and friends. --- ## What's the deal with the name? -- **`d`**`ataframes in` **`R`** `for` `M`**`ake`** <br> -- .pull-left[ [GNU Make](https://www.gnu.org/software/make/) is a tool that uses a file called a Makefile to specify **dependencies** in a pipeline. <br> `drake` implements that idea in a way that's more native to how we work in R. ] Example of a Makefile: .pull-right[] --- class: inverse ## Better Workflows <br> Does your analysis directory look like this? -- .pull-left[ `01_import.R` `02_clean.R` `03_deep_clean.R` `04_join.R` `05_analyze.R` `06_analyze_more.R` `07_report.Rmd` ] -- .pull-right[ <br> #### What's bad about this? <br> **It doesn't scale well** <br> Which you know if you've tried to add another intermediate step or reorganize your subdirectories. ] --- #### Your pipeline depends on -- - You keeping file names up-to-date and sourcing things in the right order -- - You knowing when the input data changes -- - You knowing which objects and functions are used by which other objects and functions <!-- - Explicitly saving intermediate data representations --> -- <br> #### If something breaks -- - Can you be sure about where it broke? -- - Do you know which intermediate data stores are up to date? -- - Do you need to re-run the entire pipeline again? -- .pull-right[ <p align="right"> <img src="./img/tired_drake.jpeg"> </p> ] --- ## Nice features of `drake` .pull-left[ 1) Tidy **dataframe** shows how pieces in your pipeline fit together ] -- <br> .pull-right[ 2) **Dependency graph** of all inputs and outputs ] <br> -- .pull-left[ 3) Great for iteration and **reproducibility**, especially if used with git ] <br> -- .pull-right[ 4) Automated parallel and distributed computing ] <br> -- .pull-left[ 5) It's all in R, so no writing config files! 
🎉
]

<!-- .pull-right[] -->

<!-- .pull-right[] -->

---

## `drake` : `knitr`

(Analogy stolen from Will's [interview on the R podcast](https://www.youtube.com/watch?v=eJQ29CLyDCs&feature=youtu.be&t=1533).)

<p align="center">
<img src="./img/tiny_hats.jpg">
</p>

--

1) `knitr` can **cache** chunks if they've already been run and nothing in them has changed.

--

2) A chunk successfully knitting **depends** on the previous chunk knitting and on any chunk that you specify a [`dependson`](https://twitter.com/drob/status/738786604731490304?lang=en) for.

--

3) The report lives in a single file, making that part reproducible and **compact**.

???

With knitr, you expect to be able to rerun someone's report from a single file.

---

class: inverse

## `drake` : `knitr`

But, `knitr` is a reporting tool, not a pipelining tool.

--

<br>

- You can't summon values from the `cache` in an interactive session

<br>

--

- If you're outsourcing preprocessing steps to R scripts outside the `Rmd`, you haven't solved any of the usual dependency issues from before

<br>

--

- `Rmd`s quickly get big and unwieldy for serious pipelines

---

## Is this also kinda like memoising?

--

Yes!

--

But better.

--

Memoising **caches the return value of a function for a given set of arguments**

--

<br>

- If the function is called again with the *same* set of arguments, the value is pulled from the cache instead of recomputed
- Saves time & resources 👍

--

<br>

- Implemented nicely in R in the [`memoise` package](https://github.com/r-lib/memoise)

---

## On Memoising

The downside: **Memoising only applies to one function.**

--

<br>

What if a function upstream of the memoised function changes? We could get the wrong answer.
--

<p align="center">
<img src="./img/sad_drake.jpeg" height="330px">
</p>

---

## On Memoising

```r
add <- function(a, b) {
  a + b
}

add_and_square <- function(a, b) {
  add(a, b) ^ 2
}
```

--

<br>

```r
add(2, 3)
## [1] 5
add_and_square(2, 3)
## [1] 25
```

---

## On Memoising

If we've memoised `add_and_square`,

```r
add_and_square <- memoise::memoise(add_and_square)
```

--

we return `add_and_square(2, 3)` from the cache. (Yay, fast!)

```r
add_and_square(2, 3)
## [1] 25
```

<br>

--

**But** if we now redefine `add` so that it *subtracts* `b` from `a`...

```r
add <- function(a, b) {
* a - b
}
```

--

What will happen when we call `add_and_square(2, 3)`?

---

## On Memoising

--

We should get

```r
add(2, 3) ^ 2
## [1] 1
```

--

But instead we get the old answer, which is now wrong:

```r
add_and_square(2, 3)
## [1] 25
```

--

<br>

Luckily, `drake` knows *all* the dependency relationships between functions as they relate to your targets.

So, `drake` would know that the definition of `add` has changed, meaning that `add_and_square(2, 3)` needs to be recomputed.

---

class: inverse

## A Few Pieces of `drake` Vocab

<br>

> **Targets** are the objects that drake generates;

<br>

--

> **Commands** are the pieces of R code that produce them.

<br>

--

> **Plans** wrap up the relationship between targets and commands into a workflow representation: a dataframe.

<br>

???

one column for targets, and one column for their corresponding commands.

---

## More on Plans

Plans are like that top-level script that runs your entire pipeline.

<br>

```r
source("01_import.R")
source("02_clean.R")
...
source("06_analyze_more.R")

final <- do_more_things(object_in_env)

write_out_my_results(final)
```

<br>

*But*, a plan **knows about the dependencies** in your code.
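---

## More on Plans

To get a feel for the kind of dependency information a plan relies on, here is a rough sketch using base R's `codetools` package on the `add` and `add_and_square` functions from the memoising slides. It only illustrates that function dependencies can be read off the code itself; it is not meant as a description of how `drake` analyzes code internally.

```r
# Toy functions from the memoising example
add <- function(a, b) {
  a + b
}

add_and_square <- function(a, b) {
  add(a, b) ^ 2
}

# List the global functions and variables that add_and_square refers to
globals <- codetools::findGlobals(add_and_square, merge = FALSE)

# `add` is among them, so a change to `add` is a change to
# something add_and_square depends on
"add" %in% globals$functions
## [1] TRUE
```

A plain memoised function never looks at this information, which is why it kept returning the stale `25`.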
---

## How to `drake`

--

<br>

1) Store functions and any packages you need to load in a file `funs.R`

--

2) Store a `drake` **plan** in another file

```r
plan <-
  drake_plan(
    cleaned_data = clean_my(raw_data),
    results = analyze_my(cleaned_data),
    report = report_out_my(results)
  )
```

--

3) **Run** the plan

```r
make(plan)
```

---

## What `drake` does

--

```r
plan <-
  drake_plan(
    cleaned_data = clean_my(raw_data),
    results = analyze_my(cleaned_data),
    report = report_out_my(results)
  )
```

--

`drake_plan` stores your plan as targets and commands in a dataframe.

--

```r
plan
## # A tibble: 3 x 2
##   target       command
##   <chr>        <chr>
## 1 cleaned_data clean_my(raw_data)
## 2 results      analyze_my(cleaned_data)
## 3 report       report_out_my(results)
```

---

## What `drake` does

```r
plan
## # A tibble: 3 x 2
##   target       command
##   <chr>        <chr>
## 1 cleaned_data clean_my(raw_data)
## 2 results      analyze_my(cleaned_data)
## 3 report       report_out_my(results)
```

--

```r
make(plan)
```

--

**First run** of `make(plan)`: `drake` runs the plan from scratch

--

<br>

**Thereafter**: `drake` will only rebuild targets that are out of date, and everything downstream of them

---

## What makes a target become out of date?

1) A trigger is activated (more on these later)

--

2) Something used to generate that target *or one of its upstream targets* has changed

--

```r
plan <-
  drake_plan(
    cleaned_data = clean_my(raw_data),
*   results = analyze_my(cleaned_data),
    report = report_out_my(results)
  )
```

`drake` knows that `results` depends on the object `cleaned_data` and the function `analyze_my()` because those are both part of the command used to generate `results`.

<br>

--

**So, if `cleaned_data` changes or `analyze_my` changes, `results` is out of date.**

---

## Where is all this info stored?

<br>

#### **targets**

--

In a hidden `.drake` directory, or cache, in your project's root.
[More on storage.](https://ropensci.github.io/drake/articles/storage.html)

--

<p align="left">
<img src="./img/drake_cache.jpg" height="180px">
<figcaption style="margin-left: 20%;">Spot the cache among the hidden dirs.</figcaption>
</p>

--

<br>

`loadd()` loads targets from the cache into your R session.

--

`clean()` cleans the cache. (You can recover a cache if you clean it by accident.)

<br>

---

## Where is all this info stored?

<br>

#### **dependencies**

--

`drake` **hashes** a target's dependencies to know when one of those dependencies changes

--

<p align="left">
<img src="./img/drake_cache_hashes_small.jpg" height="150px">
<figcaption style="margin-left: 20%;">Inside the data subdir of the .drake cache</figcaption>
</p>

--

and creates a `config` list that stores a dependency graph (`igraph` object) of the plan along with a bunch of other things.

--

You can access all of this with `drake_config()`.

???

You can check that the cache is there with `ls -a`.

You have [control](https://ropensci.github.io/drake/articles/storage.html#hash-algorithms) over the hashing algorithm used, location of the cache, etc.

---

class: inverse

## It's all about Functions

`drake` is built entirely around *functions* rather than scripts.

<br>

--

- A plan works by using functions to create targets

--

<br>

- This allows `drake` to infer **dependencies** between
    - objects and functions
    - functions and other functions

--

<br>

- Running `drake_plan` creates a dataframe relating each target to the command used to generate it

---

## All about Functions

```r
bad_plan <-
  drake_plan(
    first_target = source("import.R"),
    second_target = source("clean.R")
  )
```

--

Sourcing files breaks the dependency structure that makes `drake` useful.

--

<br>

```r
source("all_my_funs.R")

good_plan <-
  drake_plan(
    first_target = do_stuff(my_data),
    second_target = do_more_stuff(first_target)
  )
```

Now `drake` knows `first_target` needs to be built before work on `second_target` can begin.
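---

## All about Functions

A toy sketch of why the function-based plan makes build order recoverable: if we store the two commands from `good_plan` as unevaluated expressions, base R's `all.vars()` is enough to see which targets each command mentions. This is a simplified illustration only, not `drake`'s actual implementation, and `commands` and `target_deps` are names made up for this example.

```r
# Commands from good_plan, stored as unevaluated expressions
commands <- list(
  first_target = quote(do_stuff(my_data)),
  second_target = quote(do_more_stuff(first_target))
)

# For each command, keep only the variable names that are themselves targets
target_deps <- lapply(commands, function(cmd) {
  intersect(all.vars(cmd), names(commands))
})

target_deps$first_target
## character(0)
target_deps$second_target
## [1] "first_target"
```

With `source("clean.R")` as the command there is nothing comparable to inspect: the only thing the command mentions is a file name.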
--- ## `drake` things we won't get into - [Generate ~ big plans ~](https://ropensci.github.io/drake/articles/best-practices.html#generating-workflow-plan-data-frames) .center[] for analyses that require lots of different permutations of a certain analysis. (`drake` version 7.0.0 has a [new syntax](https://ropenscilabs.github.io/drake-manual/plans.html#create-large-plans-the-easy-way) that makes it easier to create them.) - Support for [debugging and testing ](https://ropenscilabs.github.io/drake-manual/debug.html) plans - Compatibility with [high performance computing](https://ropenscilabs.github.io/drake-manual/hpc.html) backends --- ## Moar Resources <br> - [`drake` user manual](https://ropenscilabs.github.io/drake-manual/index.html) <br> - [debugging drake](https://ropensci.github.io/drake/articles/debug.html) <br> - [Kirill Müller's cheat sheet](https://github.com/krlmlr/drake-sib-zurich/blob/master/cheat-sheet.pdf) <br> - [Sina Rüeger](https://sinarueeger.github.io/2018/10/09/workflow/) and [Christine Stawitz](https://github.com/cstawitz/RLadies_Sea_drake)'s `drake` presentations <br> - [Drake's Spotify station](https://open.spotify.com/artist/3TVXtAsR1Inumwj472S9r4) --- class: blue-light <!-- background-image: url("https://static01.nyt.com/images/2018/12/29/nyregion/28xp-explosion-sub-print/28xp-explosion-sub-facebookJumbo.jpg) --> ## Our Plan Remember the [crazy blue light](https://twitter.com/NYCFireWire/status/1078478369036165121) from late December? -- <p align="left" style="padding-right: 20%;"> <img src="./img/blue_light.jpg" height="350px"> </p> -- 😱 😱 😱 --- ## Our Plan .pull-right[] <br> <br> The Twitter account that let us know that this wasn't in fact aliens is [NYCFireWire](https://twitter.com/NYCFireWire). 
<br>

Normally they just tweet out fires and their locations in a more or less predictable pattern:

<br> <br>

--

`<borough> ** <some numbers> ** <address> <description of fire>`

--

<br>

We can use their tweets to get some info on where and when fires happen in NYC.

???

I'll illustrate a way you might want to use `drake` with something that's close to home for us.

What if we were constructing an analysis of these tweets and wanted to make sure our pipeline worked end-to-end, but didn't want to re-run parts of it unless we needed to?

---

## The Pipeline

1. Pull in tweets, either the first big batch or any new ones that show up

--

2. Extract addresses from the tweets (🎶 regex time 🎶)

--

3. Send addresses to the Google Maps API to grab their latitudes and longitudes

--

4. Profit

--

<br>

All functions are defined in [`didnt_start_it.R`](https://github.com/aedobbyn/nyc-fires/blob/master/R/didnt_start_it.R), which we'll source in now.

```r
source(here::here("R", "didnt_start_it.R"))
```

--

<br>

**Caveats**

This analysis relies on the [rtweet](https://github.com/mkearney/rtweet) and [ggmap](https://github.com/dkahle/ggmap) packages.

To be able to run it in full you'll need a [Twitter API access token](https://rtweet.info/articles/auth.html) and [Google Maps Geocoding API key](https://developers.google.com/maps/documentation/geocoding/intro#Geocoding).
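---

## The Pipeline

For a sense of what step 2 involves, here is a minimal base R sketch that pulls the borough and street out of a tweet following the `<borough> ** <some numbers> ** <address> <description of fire>` pattern. The function name `parse_fire_tweet` and the regex are assumptions made up for this slide; the real logic lives in `pull_addresses` in `didnt_start_it.R` and may work quite differently. This version simply gives up (returns `NA`s) on tweets that don't fit the pattern.

```r
# Hypothetical helper, not the pull_addresses() used in the pipeline
parse_fire_tweet <- function(text) {
  # borough, then the starred box numbers, then the street up to the
  # first period or comma
  pattern <- "^([A-Za-z ]+?)\\s+\\*+[^*]+\\*+\\s+([^.,]+)[.,]"
  m <- regmatches(text, regexec(pattern, text, perl = TRUE))[[1]]

  # No match: the tweet isn't in the usual fire format
  if (length(m) == 0) {
    return(c(borough = NA_character_, street = NA_character_))
  }

  c(borough = m[2], street = m[3])
}

parsed <- parse_fire_tweet(
  "Brooklyn *77-75-0855* 899 Hancock St. Fire top floor 3 story"
)

parsed[["borough"]]
## [1] "Brooklyn"
parsed[["street"]]
## [1] "899 Hancock St"
```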
--- ## Grabbing Tweets [`get_tweets`](https://github.com/aedobbyn/nyc-fires/blob/master/R/didnt_start_it.R) -- *Main idea*: * **Builds up a file** of the most recent set of tweets from a given account -- *Details*: - If neither file nor `tbl` is supplied as arguments, grabs an initial *seed* batch of tweets - If either is supplied, checks for new tweets and grabs them if any - Spits out the latest to the same file <br> ```r get_tweets(n_tweets_seed = 3) ## # A tibble: 3 x 5 ## text user_id status_id created_at screen_name ## <chr> <chr> <chr> <dttm> <chr> ## 1 Queens **99-75-5985*… 560024… 1090764990… 2019-01-30 19:13:33 NYCFireWire ## 2 Big fire in Bergen C… 560024… 1090762878… 2019-01-30 19:05:10 NYCFireWire ## 3 Blocking the hydrant… 560024… 1090739760… 2019-01-30 17:33:18 NYCFireWire ``` ??? - `get_seed_tweets` grabs a batch of tweets *or* reads in seed tweets from a file if the file exists - `get_more_tweets` checks if there are new tweets and, if so, pulls in the right number of them - `get_tweets` runs `get_seed_tweets` if given a null `tbl` argument, otherwise runs `get_more_tweets` --- ## Grabbing Seed Tweets A closer look at just the text of the tweets: ```r get_tweets(n_tweets_seed = 5) %>% select(text) %>% kable() ``` <table> <thead> <tr> <th style="text-align:left;"> text </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Queens **99-75-5985** 220-19 145th Avenue Near 221st Street, Battalion 54 reports all hands going to work for a basement fire in A Private Dwelling </td> </tr> <tr> <td style="text-align:left;"> Big fire in Bergen County, NJ. Old timber buildings, they just said the fire is making it's way to the Propane Filling station. </td> </tr> <tr> <td style="text-align:left;"> Blocking the hydrant is dangerous! https://t.co/IFNDVK41u3 </td> </tr> <tr> <td style="text-align:left;"> From NYC OEM: Snow Squall Warning in effect for NYC on 1/30 until 4 PM. 
Heavy and blowing snow with wind gusts up to 50 mph causing whiteout conditions, zero visibility and life-threatening travel conditions.... https://t.co/HlqrDX2UcX </td> </tr> <tr> <td style="text-align:left;"> Queens *99-75-9298* 60-15 Calloway St. Fire top floor 7 story 200x70 multiple dwelling. </td> </tr> </tbody> </table> --- ## Reupping Tweets To show how `get_tweets` can start with a `tbl` of tweets and look for new ones, we'll grab 10 `seed_tweets` that are all **older** than an old tweet ID. -- <br> ```r old_tweet_id <- "1084948074588487680" # From Jan 14 seed_tweets <- get_tweets( n_tweets_seed = 10, max_id = old_tweet_id ) nrow(seed_tweets) ## [1] 10 ``` --- ## Reupping Tweets Using `seed_tweets` as an input to the same `get_tweets` function, we check for new tweets, and, if there are any, pull them in. -- ```r full_tweets <- get_tweets(seed_tweets, n_tweets_reup = 5) ## Searching for new tweets. ## 5 new tweet(s) pulled. ``` -- <br> ```r nrow(seed_tweets) ## [1] 10 nrow(full_tweets) ## [1] 15 ``` --- ## Getting Addresses With `pull_addresses` we parse the text of the tweet to pull out borough and street and string them together into an address. ```r get_tweets(max_id = old_tweet_id) %>% * pull_addresses() %>% select(text, street, borough, address) %>% kable() ``` <table> <thead> <tr> <th style="text-align:left;"> text </th> <th style="text-align:left;"> street </th> <th style="text-align:left;"> borough </th> <th style="text-align:left;"> address </th> </tr> </thead> <tbody> <tr> <td style="text-align:left;"> Bronx *66-75-3049* 1449 Commonwealth ave. Attic fire private dwelling. </td> <td style="text-align:left;"> 1449 Commonwealth ave </td> <td style="text-align:left;"> The Bronx </td> <td style="text-align:left;"> 1449 Commonwealth ave, The Bronx </td> </tr> <tr> <td style="text-align:left;"> Manhattan *66-75-0755* 330 E 39 St. Fire in the duct work 3rd floor. 10-77(HiRise Residential). 
E-16/TL-7 1st due </td> <td style="text-align:left;"> 330 E 39 St </td> <td style="text-align:left;"> Manhattan </td> <td style="text-align:left;"> 330 E 39 St, Manhattan </td> </tr> <tr> <td style="text-align:left;"> Manhattan 10-77* 66-75-2017* 70 Little West St x 2nd Pl. BC01 has a fire on the 7th floor in the laundry area. </td> <td style="text-align:left;"> 70 Little West St x 2nd Pl </td> <td style="text-align:left;"> Manhattan </td> <td style="text-align:left;"> 70 Little West St x 2nd Pl, Manhattan </td> </tr> <tr> <td style="text-align:left;"> Bronx *66-75-2251* 2922 3rd Avenue at Westchester Avenue, Battalion 14 transmitting a 10-75 for a fire on the 4th floor of a 6 story commercial building. Squad 41 First Due </td> <td style="text-align:left;"> 2922 3rd Avenue at Westchester Avenue </td> <td style="text-align:left;"> The Bronx </td> <td style="text-align:left;"> 2922 3rd Avenue at Westchester Avenue, The Bronx </td> </tr> <tr> <td style="text-align:left;"> Brooklyn **77-75-0270** 330 Bushwick Avenue Near McKibbin Street, Fire on the 4th Floor </td> <td style="text-align:left;"> 330 Bushwick Avenue Near McKibbin Street </td> <td style="text-align:left;"> Brooklyn </td> <td style="text-align:left;"> 330 Bushwick Avenue Near McKibbin Street, Brooklyn </td> </tr> <tr> <td style="text-align:left;"> Brooklyn *77-75-0855* 899 Hancock St. Fire top floor 3 story </td> <td style="text-align:left;"> 899 Hancock St </td> <td style="text-align:left;"> Brooklyn </td> <td style="text-align:left;"> 899 Hancock St, Brooklyn </td> </tr> <tr> <td style="text-align:left;"> Brooklyn **77-75-0855** 899 Hancock Street Near Howard Avenue, All hands going to work for fire I’m the top floor </td> <td style="text-align:left;"> 899 Hancock Street Near Howard Avenue </td> <td style="text-align:left;"> Brooklyn </td> <td style="text-align:left;"> 899 Hancock Street Near Howard Avenue, Brooklyn </td> </tr> <tr> <td style="text-align:left;"> Bronx *66-75-3937* 3840 Orloff Av. 
Fire 4th floor. </td> <td style="text-align:left;"> 3840 Orloff Av </td> <td style="text-align:left;"> The Bronx </td> <td style="text-align:left;"> 3840 Orloff Av, The Bronx </td> </tr> <tr> <td style="text-align:left;"> Staten Island *MVA/PIN* Box 1744- 490 Harold St off Forest Hill Rd. Hurst tool in operation. </td> <td style="text-align:left;"> Box 1744- 490 Harold St off Forest Hill Rd </td> <td style="text-align:left;"> Staten Island </td> <td style="text-align:left;"> Box 1744- 490 Harold St off Forest Hill Rd, Staten Island </td> </tr> <tr> <td style="text-align:left;"> Queens 99-75-6810 111-15 227 St BC-54 using all hands for a fire in a pvt dwelling </td> <td style="text-align:left;"> NA </td> <td style="text-align:left;"> Queens </td> <td style="text-align:left;"> Queens </td> </tr> </tbody> </table>

---

## Getting Lat and Long

Last step of the main pipeline!

--

**Geocoding** = getting latitude and longitude from an address. (Reverse geocoding goes the other way, from coordinates to an address.)

The [`ggmap`](https://www.rdocumentation.org/packages/ggmap/versions/2.6.1/topics/geocode) package exposes this feature of the [Google Maps](https://cloud.google.com/maps-platform/) API.
-- ```r get_tweets(n_tweets_seed = 5, max_id = old_tweet_id) %>% pull_addresses() %>% * get_lat_long() ## Source : https://maps.googleapis.com/maps/api/geocode/json?address=1449%20Commonwealth%20ave%2C%20The%20Bronx&key=xxx ## Source : https://maps.googleapis.com/maps/api/geocode/json?address=330%20E%2039%20St%2C%20Manhattan&key=xxx ## Source : https://maps.googleapis.com/maps/api/geocode/json?address=70%20Little%20West%20St%20x%202nd%20Pl%2C%20Manhattan&key=xxx ## Source : https://maps.googleapis.com/maps/api/geocode/json?address=2922%203rd%20Avenue%20at%20Westchester%20Avenue%2C%20The%20Bronx&key=xxx ## Source : https://maps.googleapis.com/maps/api/geocode/json?address=330%20Bushwick%20Avenue%20Near%20McKibbin%20Street%2C%20Brooklyn&key=xxx ## # A tibble: 5 x 5 ## address lat long created_at text ## <chr> <dbl> <dbl> <dttm> <chr> ## 1 1449 Commonwealth… 40.8 -73.9 2019-01-14 15:54:18 Bronx *66-75-3049* 14… ## 2 330 E 39 St, Manh… 40.7 -74.0 2019-01-14 13:41:11 Manhattan *66-75-0755… ## 3 70 Little West St… 40.7 -74.0 2019-01-14 12:40:19 Manhattan 10-77* 66-7… ## 4 2922 3rd Avenue a… 40.8 -73.9 2019-01-14 07:06:21 Bronx *66-75-2251* 29… ## 5 330 Bushwick Aven… 40.7 -73.9 2019-01-13 21:27:15 Brooklyn **77-75-0270… ``` --- ## Downstream Analysis Later in the pipeline we'll: `count_fires`, summing up the total number of fires per `lat`-`long` combo <br> ```r count_fires <- function(tbl) { tbl %>% drop_na() %>% count(lat, long) } ``` <br> and plot them on a map (thanks again, `ggmap`) --- ## Downstream Analysis ```r get_map("new york city") %>% ggmap() ## Source : https://maps.googleapis.com/maps/api/staticmap?center=new%20york%20city&zoom=10&size=640x640&scale=2&maptype=terrain&language=en-EN&key=xxx ## Source : https://maps.googleapis.com/maps/api/geocode/json?address=new%20york%20city&key=xxx ``` <!-- --> --- Using 3000 tweets: ```r plot_fire_sums(fire_sums, output_path = NULL) ``` <!-- --> <!-- <p> --> <!-- <img src="./img/fire_sums_plot.png"> --> <!-- </p> --> 
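---

## Downstream Analysis

To make `count_fires` concrete without calling any APIs, here is a small sketch that runs it on made-up coordinates. The `toy_lat_long` data is invented for illustration and is not from the real tweets; the function body is copied from the earlier Downstream Analysis slide.

```r
library(dplyr)
library(tidyr)

# count_fires as defined on the earlier Downstream Analysis slide
count_fires <- function(tbl) {
  tbl %>%
    drop_na() %>%
    count(lat, long)
}

# Made-up data: two fires at one spot, one at another, one failed geocode
toy_lat_long <- tibble(
  lat = c(40.8, 40.8, 40.7, NA),
  long = c(-73.9, -73.9, -74.0, NA)
)

toy_counts <- count_fires(toy_lat_long)

# The NA row is dropped and the repeated spot is collapsed into one row
nrow(toy_counts)
## [1] 2
```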
---

## Quick Benchmark

So where does `drake` really come in handy here?

--

The trips to and from Twitter and Google take a while.

--

<br>

How long does the pipeline take to run on a single tweet?

--

```r
(our_bench <-
  bench::mark({
    get_tweets(n_tweets_seed = 1) %>% # Hi Twitter
      pull_addresses() %>%
      get_lat_long() # Hi Google
  }) %>%
  as_tibble() %>%
  pull(median))
## [1] 292ms
```

---

## Quick Benchmark

Roughly how many **minutes** would the pipeline take for 3k tweets?

(No batch speedups since we're going `rowwise` on each tweet.)

<br>

```r
(n_mins <-
  (as.numeric(our_bench) * # Returns this in seconds
    3000) / # 3k tweets
    60 # 60 seconds in a minute
)
## [1] 14.61218
```

---

## Quick Benchmark

All our downstream analyses depend on this pipeline.

If we tweak some code but `drake` determines we don't need to rerun the pipeline, we will save **15 minutes** of our lives.

<br>

<br>

And we can rest assured we have the most up-to-date data.

---

## Our `drake` Plan

We'll set up the `drake` plan and run it for real. An exclusive sneak peek:

```r
plan <-
  drake_plan(
    seed_fires = get_tweets(),
    fires = target(
      command = get_tweets(tbl = seed_fires),
      # Always look for new tweets
      trigger = trigger(condition = TRUE)
    ),
    # Extract addresses from tweets
    addresses = pull_addresses(fires),
    # Send to Google for lat-longs
    lat_long = get_lat_long(addresses),
    # Sum up n fires per lat-long combo
    fire_sums = count_fires(lat_long),
    time_graph = graph_fire_times(lat_long),
    plot = plot_fire_sums(fire_sums)
  )
```

---

## Questions so far?
```r devtools::session_info() ## ─ Session info ────────────────────────────────────────────────────────── ## setting value ## version R version 3.5.2 (2018-12-20) ## os macOS Mojave 10.14 ## system x86_64, darwin15.6.0 ## ui RStudio ## language (EN) ## collate en_US.UTF-8 ## ctype en_US.UTF-8 ## tz America/New_York ## date 2019-02-12 ## ## ─ Packages ────────────────────────────────────────────────────────────── ## package * version date lib ## askpass 1.1 2019-01-13 [1] ## assertthat 0.2.0 2017-04-11 [1] ## backports 1.1.3 2018-12-14 [1] ## bench 1.0.1 2018-06-06 [1] ## bindr 0.1.1 2018-03-13 [1] ## bindrcpp * 0.2.2 2018-03-29 [1] ## bitops 1.0-6 2013-08-17 [1] ## broom 0.5.0 2018-07-17 [1] ## callr 3.1.1 2018-12-21 [1] ## cellranger 1.1.0 2016-07-27 [1] ## cli 1.0.1 2018-09-25 [1] ## codetools 0.2-15 2016-10-05 [1] ## colorspace 1.4-0 2019-01-13 [1] ## crayon 1.3.4 2018-12-20 [1] ## curl 3.3 2019-01-10 [1] ## desc 1.2.0 2018-05-01 [1] ## devtools 2.0.1 2018-10-26 [1] ## digest 0.6.18 2018-10-10 [1] ## dplyr * 0.7.8 2018-11-10 [1] ## drake * 6.2.1 2018-12-10 [1] ## emo 0.0.0.9000 2018-07-05 [1] ## emojifont * 0.5.2 2019-01-17 [1] ## evaluate 0.12 2018-10-09 [1] ## forcats * 0.3.0 2018-02-19 [1] ## formatR 1.5 2017-04-25 [1] ## fs * 1.2.6 2018-08-23 [1] ## ggmap * 2.7.904 2019-01-04 [1] ## ggplot2 * 3.1.0 2018-10-25 [1] ## glue * 1.3.0 2018-07-17 [1] ## gtable 0.2.0 2016-02-26 [1] ## haven 1.1.2 2018-06-27 [1] ## here * 0.1 2017-05-28 [1] ## hms 0.4.2 2018-03-10 [1] ## htmltools 0.3.6 2017-04-28 [1] ## httpuv 1.4.5.1 2018-12-18 [1] ## httr 1.4.0 2018-12-11 [1] ## igraph 1.2.2 2018-07-27 [1] ## jpeg 0.1-8 2014-01-23 [1] ## jsonlite 1.6 2018-12-07 [1] ## kableExtra * 1.0.1 2019-01-22 [1] ## knitr * 1.21 2018-12-10 [1] ## labeling 0.3 2014-08-23 [1] ## later 0.7.5 2018-09-18 [1] ## lattice 0.20-38 2018-11-04 [1] ## lazyeval 0.2.1 2017-10-29 [1] ## lubridate 1.7.4 2018-04-11 [1] ## magrittr 1.5 2014-11-22 [1] ## maps * 3.3.0 2018-04-03 [1] ## memoise 1.1.0 2017-04-21 
[1] ## mime 0.6 2018-10-05 [1] ## modelr 0.1.2 2018-05-11 [1] ## munsell 0.5.0 2018-06-12 [1] ## nlme 3.1-137 2018-04-07 [1] ## openssl 1.2.1 2019-01-17 [1] ## packrat 0.4.9-10 2018-08-10 [1] ## pillar 1.3.1.9000 2019-02-03 [1] ## pkgbuild 1.0.2 2018-10-16 [1] ## pkgconfig 2.0.2 2018-08-16 [1] ## pkgload 1.0.2 2018-10-29 [1] ## plyr 1.8.4 2016-06-08 [1] ## png 0.1-7 2013-12-03 [1] ## prettyunits 1.0.2 2015-07-13 [1] ## processx 3.2.1 2018-12-05 [1] ## profmem 0.5.0 2018-01-30 [1] ## promises 1.0.1 2018-04-13 [1] ## proto 1.0.0 2016-10-29 [1] ## ps 1.3.0 2018-12-21 [1] ## purrr * 0.3.0 2019-01-27 [1] ## R6 2.3.0 2018-10-04 [1] ## Rcpp 1.0.0 2018-11-07 [1] ## readr * 1.3.1 2018-12-21 [1] ## readxl 1.1.0.9000 2018-07-05 [1] ## remotes 2.0.2 2018-10-30 [1] ## RgoogleMaps 1.4.3 2018-11-07 [1] ## rjson 0.2.20 2018-06-08 [1] ## rlang 0.3.1 2019-01-08 [1] ## rmarkdown 1.11 2018-12-08 [1] ## rprojroot 1.3-2 2018-01-03 [1] ## rstudioapi 0.9.0 2019-01-09 [1] ## rtweet * 0.6.8 2018-09-28 [1] ## rvest 0.3.2 2016-06-17 [1] ## scales 1.0.0 2018-08-09 [1] ## servr 0.11 2018-10-23 [1] ## sessioninfo 1.1.1 2018-11-05 [1] ## showtext 0.6 2019-01-10 [1] ## showtextdb 2.0 2017-09-11 [1] ## storr 1.2.1 2018-10-18 [1] ## stringi 1.2.4 2018-07-20 [1] ## stringr * 1.3.1 2018-05-10 [1] ## sysfonts 0.8 2018-10-11 [1] ## testthat * 2.0.1 2018-10-13 [1] ## tibble * 2.0.1 2019-01-12 [1] ## tidyr * 0.8.2 2018-10-28 [1] ## tidyselect * 0.2.5 2018-10-11 [1] ## tidyverse * 1.2.1 2017-11-14 [1] ## usethis 1.4.0 2018-08-14 [1] ## viridisLite 0.3.0 2018-02-01 [1] ## webshot 0.5.1 2018-09-28 [1] ## withr 2.1.2 2018-03-15 [1] ## xaringan 0.8.6 2019-02-04 [1] ## xfun 0.4 2018-10-23 [1] ## xml2 1.2.0 2018-01-24 [1] ## yaml 2.2.0 2018-07-25 [1] ## source ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.2) ## Github 
(r-lib/crayon@74bee76) ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.1) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## Github (hadley/emo@02a5206) ## Github (GuangchuangYu/emojifont@f7ba3ec) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## Github (dkahle/ggmap@4dfe516) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.1) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.2) ## Github (rstudio/packrat@7d320b1) ## Github (r-lib/pillar@3a54b8d) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.1) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## Github (tidyverse/readxl@be74f8f) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.2) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## Github (yihui/xaringan@0d713d5) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## CRAN (R 3.5.0) ## ## [1] /Library/Frameworks/R.framework/Versions/3.5/Resources/library ``` --- class: inverse ## Live coding time! 
<br>

The `rtweet` package also supports POSTing tweets, so we can test out whether our trigger successfully pulls in new tweets with our own

--

<br>

## 🔥 **[`burner account!`](https://twitter.com/didntstartit)** 🔥

<br>

--

On to [part 2](https://github.com/aedobbyn/nyc-fires/blob/master/live_code.md) after this message from our sponsor.